Stop the Censorshit please, Facebook.

I'm so over seeing these Zuck-ful Silicon Valley Control Freaks taking advice from various governments, especially Facebook trying to implement its own home-brew censorship. But calling it that would be doing a disservice to the word censorship, which is a good and valued form of governance, believe it or not.

That's why instead I'm calling it "censorshit".

Stop the Censorshit please, Facebook.

Especially in countries outside the USA, like NZ and, just today, India. It was better before you decided to dick around with this stuff. As a sign it is surely (hopefully) reaching its crescendo, I present this astonishing piece made by ex-Facebook staff who were wrongfully dismissed and are taking Facebook to court.

I usually never put a full stop in a heading, but there it is: I'm steaming mad. It started happening around the COVID time, the fun old Sars-2 good times. I started to keep a folder of image clippings of utterly unbelievable shit that I've seen and collected. The first one on the list is the latest one, the one that put me over the edge enough to write this blog post:

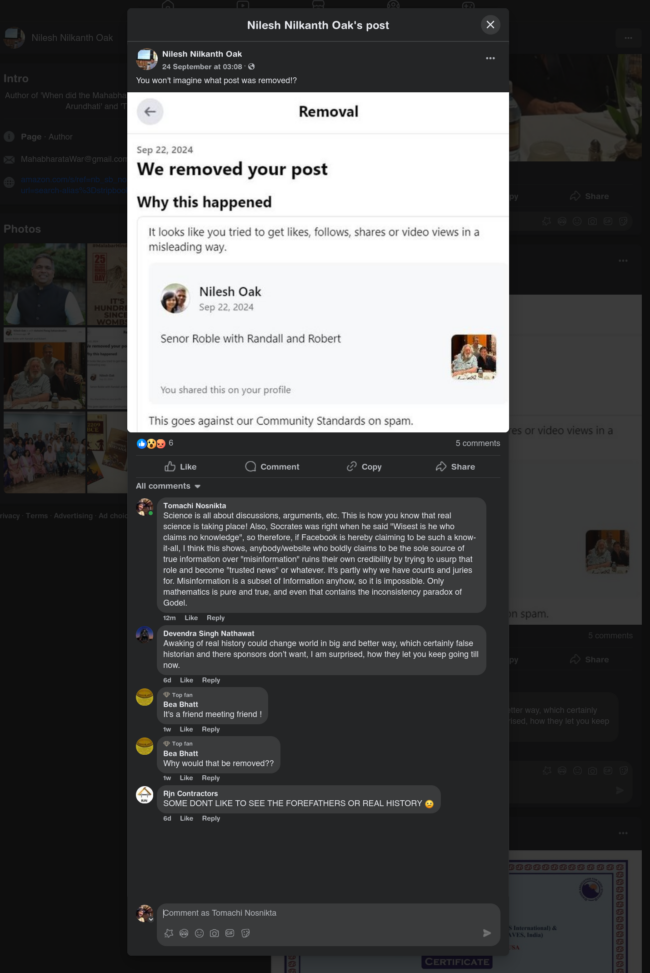

0. I was researching the ancient culture of India and...

I will contact Nilesh now to ask him if he wants to guest post the content below on my website instead of FB (if I like it... I haven't seen it yet):

You can see my enormous post. Hopefully Nilesh will have an interesting article and I can post it here soon. Here are my comments on his page, from me to Nilesh:

Science is all about discussions, arguments, etc. This is how you know that real science is taking place! Also, Socrates was right when he said "Wisest is he who claims no knowledge". So if Facebook is claiming to be such a know-it-all, I think this shows that any person or website that boldly claims to be the sole source of true information over "misinformation" ruins its own credibility by trying to usurp that role and become "trusted news" or whatever. It's partly what we have courts and juries for. Misinformation is a subset of information anyhow, so cleanly separating the two is impossible. Only mathematics is pure and true, and even that runs into Gödel's incompleteness theorems.

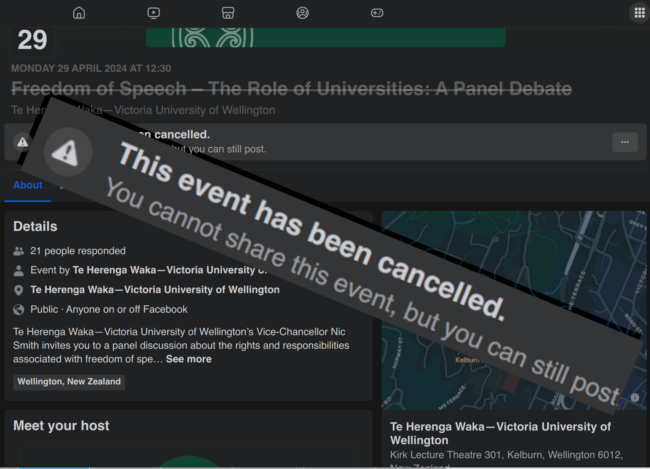

1. Freedom of Speech talk cancelled. What a shame aye. We gotta fight for this right to party.

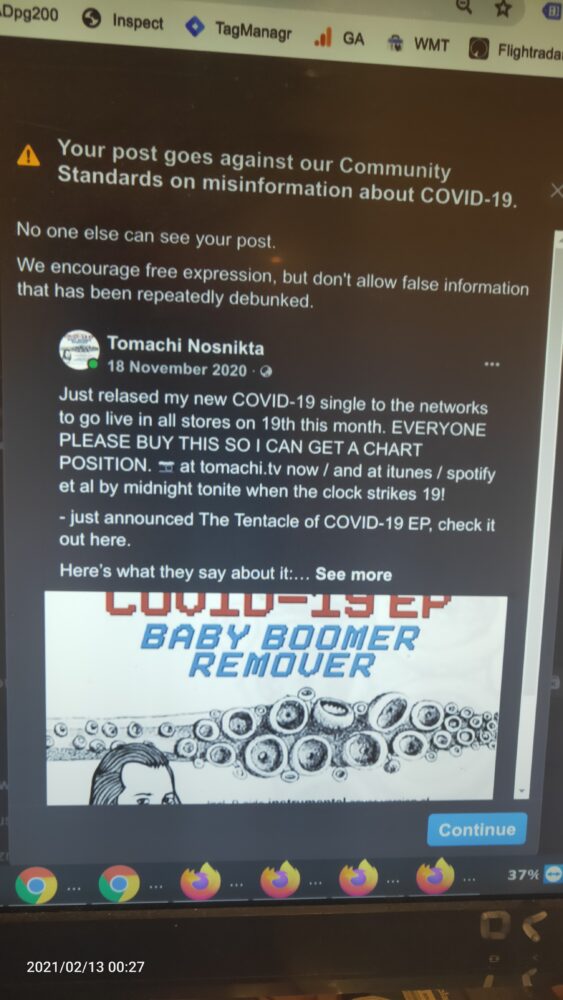

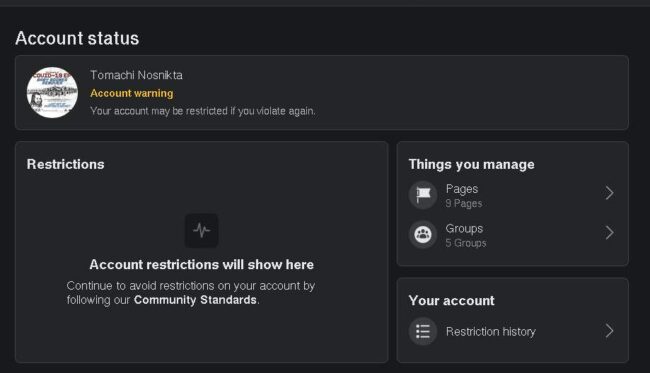

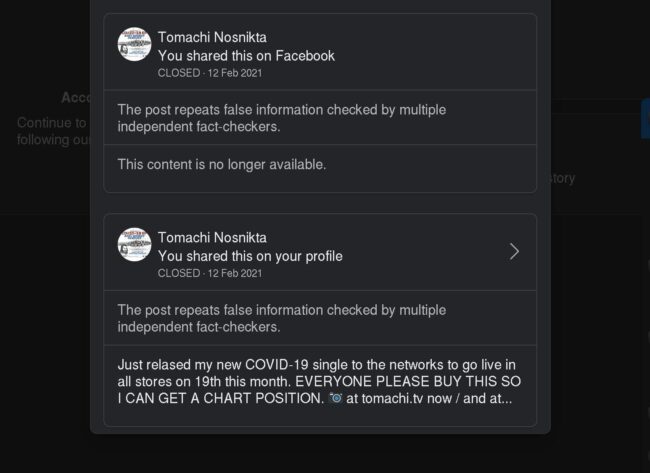

2. Facebook Community Standards: screenshot shows the reason for my blogual posturing.

This shocking image is a photo that I took of my phone's screen with the camera of another phone. Since there was fuck-all that I did to make it appear, I knew that I would probably make it disappear somehow while trying to screenshot the fucker, and you know you're never gonna see that again, right?

"Your post goes against our Community Standards on misinformation about COVID-19. No one else can see your post. We encourage free expression, but don't allow false information that has been repeatedly debunked" - What, you mean that part about organ harvesting from the Uyghur and Falun Gong practitioners?

"We encourage free expression, but don't allow false information that has been repeatedly debunked." I don't think Chinese organ harvesting and death vans have been debunked.

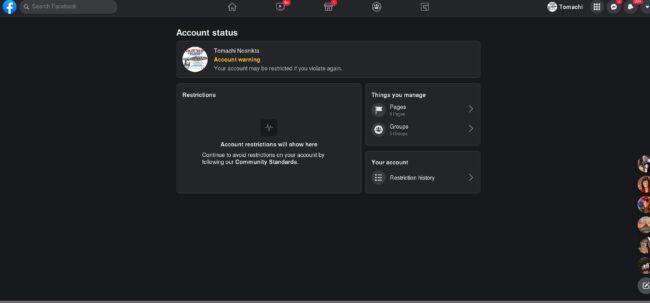

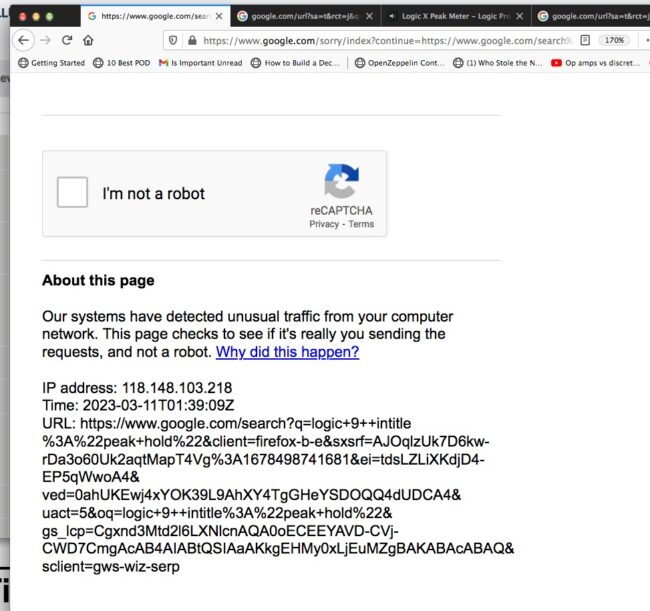

3. In a related but different matter... sometimes I go at it so hard, that Google thinks I'm a robot:

2. This terrifying but amazingly designed "modal dialog" suddenly appeared on my phone.

I had no idea what I did to bring it up, if anything. I don't use this app; I use DoubleTwist, one of the rare apps that I paid for, which is no longer available. Probably the screen went black while I thought about it.

4. I was so paranoid about the government pigs during Sars2 picnic times that I felt the need to screenshot this price quote for a trip to OKINAWA, to create a misdirection to confuse them if they asked why I was driving about during lockdown:

Shout outs to BRYAN FROM OKINAWA! Brother, do you remember me calling you and asking you to back up my alibi that I was....... coming or going near China by the sounds of it? Why on earth did I do this?

Final Word - An Extract From A Harvard Paper on the Subject

Embracing complexity, localizing misinformation

As a field, we cannot address all the issues (see link above), particularly the systemic problems around the lack of access to data and funding or the political and economic incentives that prevent effective solutions from being implemented. However, addressing criticism related to the epistemological foundations of the field is within the control of the field itself. To move the field forward and address gaps in theories, definitions, and practices, these are some of the steps that researchers in the field can take:

- Clarify exactly what instantiation of misinformation has been studied in a given research project and the extent to which findings might—or might not—apply to different kinds of content and processes.

- Be specific about the local and context-dependent socio-technical mechanisms that enable or constrain online misinformation in that given research process to avoid technologically deterministic over-generalisations.

- Be transparent about the epistemological assumptions that motivate researchers to define a specific type of content as misleading and a population as misled.

That misinformation research needs to be more specific about what constitutes “misinformation” in a given research project is a common criticism of the field (see Williams, 2024) and the easiest to address. While misinformation is commonly described as false or misleading information, this definition can refer to many different kinds of content and can lead to the often polarizing and contradictory findings of the field. For example, Budak et al. (2024) found that the average user has minimal exposure to misinformation by measuring so-called “click-bait.” In this study, because misinformation is narrowly defined as false claims from unreliable click bait news sources, its impact is mainly limited to a small group of people. However, if misinformation is instead defined as hyperpartisan content from mainstream as well as alternative media outlets, then the effects are much larger and systemic and can lead to radicalisation or extreme polarisation (Benkler et al., 2018). Researchers should, therefore, be more explicit about what they are referring to as misinformation.

But, while necessary, being more specific about what is being researched does not address the tendency to overgeneralise findings on online misinformation and marry technologically deterministic arguments. Technological determinism is the tendency of pointing at technology to explain patterns of human behavior. The emphasis on technology, without considering the social contexts in which it operates, can result in over or underestimating the actual role of technological artifacts in our societies. Technologically deterministic arguments are present across many contemporary tech policy issues, including filter bubbles, echo chambers, algorithmic amplification, and rabbit holing, where technology is seen as the only cause of these problems.

For example, research on the spread of misinformation on social media platforms often attributes this phenomenon solely to the algorithmic recommendation systems of social media platforms. This technologically deterministic view overlooks the fact that users also play an active role in seeking out, engaging with, and sharing misinformation based on their own preferences and predispositions. While algorithms are certainly a (big) part of the problem, we need to pay attention to interactions between users and algorithms and keep in mind that misinformation can spread in spaces that are not algorithmically mediated, like encrypted chat applications.

Affordance theory can provide a more nuanced understanding of the relationship between technology and user behavior. Affordance theory is the idea that the impact of a given technology on a user’s behavior depends on 1) the design of the technology, as much as on 2) users’ predispositions, identities, and needs, as well as on 3) the temporal and spatial contexts in which the technology is used. Users cannot do an unlimited number of things with a given technology due to its design constraints, although they can sometimes do things with technology for which it was not specifically designed.

In the context of online misinformation, this translates to the fact that platform users can make use of information technologies, including platform algorithms, in ways that are crafty and unexpected. We know, for example, that young users are often well aware of how recommendation algorithms work and literally train them (or attempt to) to meet their needs. Not by chance, many in the field have focused on studying media manipulation tactics, a concept that refers exactly to this aspect of misinformation: On search engines and social media platforms, users actively work to game platforms' affordances, and their online behavior is not passively dictated by how the platform works (Bradshaw, 2019; Tripodi, 2022).

The other aspect to reflect upon when considering how people get misinformed online is that the internet can often be a highly participative environment, especially when it comes to collectively making sense of the world. Within communities that are organised around validating some form of knowledge (e.g., conspiracy theories but also, to stay close to academia, metascience discussions about p-hacking), what constitutes “valid truth” is not imposed top-down on users who simply passively believe and reshare specific pieces of content, but it is negotiated and constructed via collective efforts (Tripodi, 2018). People believe things together because they do things together.

It is then crucial that misinformation researchers spend time online embedded in the communities that they study in order to understand what values and principles motivate users to discern between acceptable and not acceptable claims about the world (Friedberg, 2020). Different communities are affected very differently by misinformation, and identifying which communities are at higher risk of being misinformed should be part of the job. For example, diaspora communities from non-Western countries living in Western countries are at a high risk of being misinformed as they are the direct target of influence operations coming from both their own and the host country (Aljizawi et al., 2022). Typically, within these communities, misinformation is not easily recognizable as it is embedded within complex narratives and mixed with verifiable information.

One of the most important points to be made is that, simply put, we make sense of the world via stories, not via single facts. This is something that misinformation researchers only recently started to grapple with, such as in Kate Starbird’s (2023) work on frames and disinformation. Starbird (2023) proposes to understand misinformation as a collective sensemaking process in which individuals select what counts as evidence not (solely) based on the quality of such evidence, but also based on pre-existing narratives. Also, within a given narrative, individuals decide what counts as evidence while interacting with each other, not in solitude. This interpretative process happens both online and offline (e.g., talking with family, friends, etc.). Key questions to be asked when researching online misinformation, then, are what stories are shaping the interpretation of online content and where do such narratives come from (Kuo & Marwick, 2021)? Which actors are trying to influence the narrative, online and offline? And how do we account for these frames in experimental settings? As Starbird (2023) notes, when frames are the key to understanding online misinformation, how do we produce and communicate “better frames” in addition to “better facts”?